Introduction

Customer segmentation is an essential part of modern marketing and business. Through customer segmentation, entrepreneurs can not only understand their target audience but also develop personalised and efficient marketing strategies.

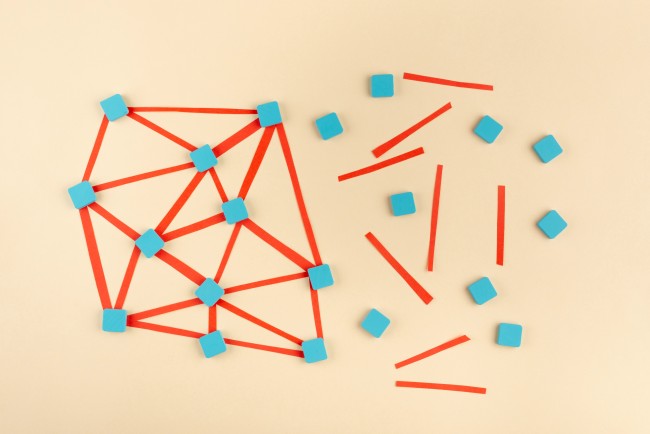

Customer segmentation is the process of dividing customers into separate groups based on similar attributes, such as demographics, psychographics, behaviour patterns, and purchase habits.

Data scientists can use a range of tools to perform customer segmentation and analyse its results.

Today, Diana Cherkezian will walk us through advanced clustering techniques for high-accuracy customer segmentation.

Diana is a Senior Data Analyst with a broad range of experience: conducting extensive research based on statistical analysis, experiments (A/B tests and quasi-experiments), and modelling; delivering recommendations; building analytical datasets with SQL/Python; and creating data visualisations to support sustainable business growth.

Business Value

Clustering analysis in customer segmentation provides a deep understanding of customer characteristics and behaviours, enabling businesses to work with their target audiences more effectively.

For instance, understanding different customer clusters can highlight which groups are more likely to discontinue services, enabling proactive measures to retain them.

According to Diana, companies can also refine their pricing models by recognising differences in price sensitivity across their audience, maximising both profitability and customer satisfaction.

Advanced Clustering Techniques for Customer Segmentation

Clustering methods have several advantages over traditional segmentation approaches. The techniques below are generally more accurate and flexible.

1. Density-Based Spatial Clustering (DBSCAN)

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is the best-known density-based clustering algorithm. It is based on an intuitive notion of ‘clusters’ and ‘noise’.

The key idea is that, for each core point of a cluster, the neighbourhood of a given radius (often called eps) must contain at least a minimum number of points. DBSCAN does not require the number of clusters as a parameter.

Rather, it infers the number of clusters from the data, and it can discover clusters of arbitrary shape. These features make DBSCAN one of the best tools for working with ‘irregular’ clusters.

DBSCAN can be described intuitively. Imagine a crowd of people: some chat or dance in groups, while others stand alone. We want to identify the groups in this crowd, but this is tricky because the groups differ in size and there are lonely “outsiders”.

Here’s how to do it: mark everyone who has at least three neighbours nearby. These people are “core points”. Then, mark the people who have fewer than three neighbours, at least one of whom is a core point.

These are “border points”. Finally, people who have fewer than three neighbours and no core point among them (only border points, or no one at all) are “outliers”, or “noise points”.

You’re done! Core points that are close to each other, together with their neighbours, form a cluster. A border point joins the cluster of a core point it is near. If it is near several clusters, standard DBSCAN assigns it to whichever cluster reaches it first. Outliers are individualists: they don’t belong to any cluster.
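To make the crowd analogy concrete, here is a minimal pure-Python sketch of DBSCAN. It is illustrative only. The function names (`dbscan`, `region_query`) and the `min_neighbours` parameter are our own. `min_neighbours` counts neighbours excluding the point itself, to match the “at least three neighbours” rule above. The sketch compares every pair of points, so it only suits small datasets.

```python
import math

NOISE = -1

def region_query(points, i, eps):
    """Return indices of the other points within distance eps of points[i]."""
    return [j for j, q in enumerate(points) if j != i and math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_neighbours):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_neighbours:
            labels[i] = NOISE  # provisional: may become a border point later
            continue
        # points[i] is a core point: start a new cluster and grow it
        labels[i] = cluster_id
        queue = list(neighbours)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # reached from a core point, so it is a border point
            if labels[j] is not None:
                continue  # already assigned; do not expand from it again
            labels[j] = cluster_id
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_neighbours:
                queue.extend(j_neighbours)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels

# Two tight groups, one border point (2, 1) and one loner (5, 5)
customers = [(0, 0), (0, 1), (1, 0), (1, 1), (2, 1),
             (10, 10), (10, 11), (11, 10), (11, 11), (5, 5)]
labels = dbscan(customers, eps=1.5, min_neighbours=3)
print(labels)  # [0, 0, 0, 0, 0, 1, 1, 1, 1, -1]
```

For real data you would normally use a library implementation such as scikit-learn's `sklearn.cluster.DBSCAN(eps=..., min_samples=...)`. There, `min_samples` counts the point itself, so “three neighbours” corresponds to `min_samples=4`.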

Advantages of DBSCAN

- Well suited to anomalous data: outliers are labelled as noise instead of being forced into a cluster.

- Useful for modelling customer behaviour patterns that don’t form neat, compact groups.

- Handles clusters of complex, arbitrary shape.

- Can be combined with other methods, such as K-means or GMM.

Disadvantages of DBSCAN

- Difficult to incorporate categorical features.

- Requires a drop in density to detect cluster borders.

- Sensitive to feature scale, so features usually need to be standardised first.

- Struggles with high-dimensional data.

One notable modification of DBSCAN is Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN).

HDBSCAN is especially helpful for datasets with complex structures or varying densities. It builds a hierarchical tree of clusters that lets users examine the data at different levels of granularity. It then extracts the most stable high-density clusters from this tree. Its main parameter is the minimum cluster size rather than a fixed neighbourhood radius.

2. Deep Clustering (e.g., Autoencoders + K-means)

K-means clustering assigns each data point to one of K clusters, choosing the cluster whose centre (centroid) is nearest. The algorithm is iterative. After each assignment step, every centroid is recomputed as the mean of the points assigned to it, and the process repeats until the assignments stop changing. K, the number of clusters, must be chosen in advance.
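The iterative procedure described above fits in a few lines of plain Python. This is a minimal sketch of Lloyd's algorithm with random initialisation, not the k-means++ initialisation most libraries use. The function name `kmeans` and the sample points are hypothetical.

```python
import math
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's K-means. Returns (labels, centroids)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k random data points
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if its cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids

# Hypothetical (spending, visits) pairs forming two obvious groups
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
labels, centroids = kmeans(points, k=2)
print(labels, centroids)
```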

Advantages

- Fast

- Easy to implement

- Easy to modify and combine with other algorithms

Disadvantages

- Works best with well-separated data.

- Sensitive to noise.

- Doesn’t provide clear information about the quality of clusters.

Because it combines easily with other methods, K-means is often paired with autoencoders to perform deep clustering. Autoencoders are artificial neural networks that learn data encodings in an unsupervised manner.

Autoencoders reduce dimensionality by training the network to capture the most important features of the input data. An autoencoder consists of three parts: an encoder, a bottleneck, and a decoder. The encoder is a compression module that maps the input to a smaller representation.

The bottleneck holds this compressed knowledge representation. The decoder decompresses it, reconstructing the data from its encoded form. In deep clustering, K-means is then applied to the bottleneck representations instead of the raw features.
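The following NumPy sketch shows the deep-clustering pipeline in miniature: train an autoencoder, take the bottleneck codes, and run K-means on them.

For brevity, it uses a linear autoencoder (one weight matrix for the encoder and one for the decoder) trained with plain gradient descent. Such a model learns roughly the same subspace as PCA. Real deep clustering uses multi-layer non-linear networks, typically built with a deep learning framework. The data, function names, and hyperparameters are all illustrative assumptions.

```python
import numpy as np

def train_linear_autoencoder(X, code_dim, lr=0.05, epochs=2000, seed=0):
    """Fit encoder (d x code_dim) and decoder (code_dim x d) weights by gradient descent on MSE."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W_enc = rng.normal(scale=0.1, size=(d, code_dim))
    W_dec = rng.normal(scale=0.1, size=(code_dim, d))
    losses = []
    for _ in range(epochs):
        Z = X @ W_enc              # encoder: compress into the bottleneck
        X_hat = Z @ W_dec          # decoder: reconstruct the input
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        grad_out = 2 * err / err.size            # gradient of the MSE w.r.t. X_hat
        grad_dec = Z.T @ grad_out
        grad_enc = X.T @ (grad_out @ W_dec.T)
        W_dec -= lr * grad_dec
        W_enc -= lr * grad_enc
    return W_enc, losses

def kmeans_labels(Z, k, iters=50, seed=0):
    """Plain K-means on the bottleneck codes; returns a cluster label per row."""
    rng = np.random.default_rng(seed)
    centroids = Z[rng.choice(len(Z), size=k, replace=False)]
    for _ in range(iters):
        dists = np.linalg.norm(Z[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        centroids = np.array([Z[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
                              for c in range(k)])
    return labels

# Synthetic customers: two groups described by 4 features (illustrative data only)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal([3, 3, 0, 0], 0.3, size=(50, 4)),
               rng.normal([-3, -3, 0, 0], 0.3, size=(50, 4))])
X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardise features

W_enc, losses = train_linear_autoencoder(X, code_dim=2)
codes = X @ W_enc                          # bottleneck representation
labels = kmeans_labels(codes, k=2)
print(f"reconstruction MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```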

3. Gaussian Mixture Models (GMM)

A Gaussian Mixture Model is a probabilistic model for representing normally distributed subpopulations within an overall population.

Mixture models in general don’t require knowing which subpopulation a data point belongs to, allowing the model to learn the subpopulations automatically. Since subpopulation assignment is not known, this constitutes a form of unsupervised learning.
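Mixture models are usually fitted with the expectation-maximisation (EM) algorithm. EM alternates between two steps: softly assigning points to components, then re-estimating each component from those assignments. Below is a minimal one-dimensional sketch in plain Python. The spending values and starting guesses are hypothetical, and a production model would normally use a library such as scikit-learn's `GaussianMixture`.

```python
import math

def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gmm_1d(xs, means, variances, weights, n_iter=100):
    """EM for a 1-D Gaussian mixture. Returns (means, variances, weights, responsibilities)."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    for _ in range(n_iter):
        # E-step: responsibility = probability that each component generated each point
        resp = []
        for x in xs:
            probs = [weights[c] * normal_pdf(x, means[c], variances[c]) for c in range(k)]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate each component from its softly assigned points
        for c in range(k):
            nc = sum(r[c] for r in resp)
            means[c] = sum(r[c] * x for r, x in zip(resp, xs)) / nc
            variances[c] = max(sum(r[c] * (x - means[c]) ** 2 for r, x in zip(resp, xs)) / nc, 1e-6)
            weights[c] = nc / len(xs)
    return means, variances, weights, resp

# Hypothetical monthly spend of 11 customers drawn from two hidden subpopulations
spend = [20, 22, 19, 25, 21, 23, 95, 100, 105, 98, 102]
means, variances, weights, resp = fit_gmm_1d(spend, means=[10, 50], variances=[100, 100], weights=[0.5, 0.5])
print([round(m, 1) for m in means], [round(w, 2) for w in weights])
```

Unlike K-means, each customer ends up with a probability of belonging to each component (`resp`) rather than a single hard label.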

Advantages of GMM

- Fast: expectation-maximisation (EM), the standard way to fit a GMM, is among the fastest algorithms for learning mixture models

Disadvantages of GMM

- Needs held-out data or information-theoretic criteria (such as BIC) to decide how many components to use in the absence of external cues

GMM can be easily modified and combined with other algorithms, such as K-means, which is often used to initialise it. GMM-UBM (a GMM with a Universal Background Model) has also been widely used to model speech features in speaker recognition systems.

4. Spectral Clustering

Spectral clustering is an exploratory data analysis (EDA) technique that splits complex, multidimensional datasets into smaller clusters of similar data. It uses the eigenvalues and eigenvectors of a similarity matrix (more precisely, of its graph Laplacian) to project the data into a lower-dimensional space. There, the data can be easily separated into clusters.

Spectral clustering takes four steps:

- Build the similarity graph.

- Compute the eigenvalues and eigenvectors of its Laplacian.

- Project the data onto the eigenvectors with the smallest eigenvalues, which gives a lower-dimensional space.

- Cluster the projected data, typically with K-means.
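Here is a minimal NumPy sketch of the procedure. It follows one common variant: a Gaussian similarity graph, the symmetric normalised Laplacian, row-normalised eigenvectors, and then K-means with a deterministic farthest-point start. The bandwidth `sigma`, the demo data, and the function name are illustrative assumptions.

The demo uses two concentric rings, a classic case that plain K-means on raw coordinates cannot separate. With two clusters, K-means can only split the plane with a straight line.

```python
import numpy as np

def spectral_clustering(X, k, sigma=1.0, iters=50):
    """Normalised spectral clustering (one common variant). Returns a label per row of X."""
    # 1. Similarity graph: Gaussian (RBF) affinity between every pair of points
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
    W = np.exp(-sq_dists / (2 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    # 2. Eigendecomposition of the Laplacian L = I - D^(-1/2) W D^(-1/2)
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L)  # eigenvalues come back in ascending order
    # 3. Project onto the k eigenvectors with the smallest eigenvalues; normalise rows
    U = eigvecs[:, :k]
    U = U / np.linalg.norm(U, axis=1, keepdims=True)
    # 4. K-means on the projected points, starting from mutually distant rows
    centroids = [U[0]]
    for _ in range(1, k):
        nearest = np.min([np.linalg.norm(U - c, axis=1) for c in centroids], axis=0)
        centroids.append(U[np.argmax(nearest)])
    centroids = np.array(centroids)
    for _ in range(iters):
        labels = np.argmin(np.linalg.norm(U[:, None, :] - centroids[None, :, :], axis=2), axis=1)
        centroids = np.array([U[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
                              for c in range(k)])
    return labels

# Two concentric rings of 40 points each (radius 1 and radius 5)
angles = np.linspace(0, 2 * np.pi, 40, endpoint=False)
ring = np.column_stack([np.cos(angles), np.sin(angles)])
X = np.vstack([ring, 5 * ring])
labels = spectral_clustering(X, k=2, sigma=0.5)
print(labels)
```

The full pairwise similarity matrix is what makes spectral clustering memory-hungry: it grows with the square of the number of points.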

Advantages of Spectral Clustering

- Can handle non-linear cluster shapes

- Reduces the dimensionality of the data

- Fast on small and medium-sized datasets

Disadvantages of Spectral Clustering

- Requires significant memory

- Requires a specified number of clusters beforehand

5. Self-Organizing Maps (SOM)

Self-organising maps (SOMs) help to visualise high-dimensional data. This technique represents multidimensional data in a two-dimensional space using a self-organising neural network. The main task of SOMs is to make information easier to comprehend through visualisation while preserving the topological structure of the data: similar inputs are mapped to nearby nodes.
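A self-organising map can be sketched in plain Python. Each node on a small 2-D grid holds a weight vector. For every input, the best-matching unit (BMU) and its grid neighbours are nudged towards it, and both the learning rate and the neighbourhood radius shrink over time. The grid size, decay schedules, and sample data below are illustrative assumptions, not recommended settings.

```python
import math
import random

def best_matching_unit(weights, x):
    """Grid node whose weight vector is closest to input x."""
    return min(weights, key=lambda node: math.dist(weights[node], x))

def train_som(data, rows, cols, epochs=200, lr0=0.5, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    radius0 = max(rows, cols) / 2
    # One randomly initialised weight vector per node of the rows x cols grid
    weights = {(r, c): [rng.random() for _ in range(dim)] for r in range(rows) for c in range(cols)}
    for t in range(epochs):
        frac = t / epochs
        lr = lr0 * (1 - frac)                     # learning rate decays linearly
        radius = max(radius0 * (1 - frac), 0.5)   # neighbourhood shrinks over time
        for x in rng.sample(data, len(data)):     # visit inputs in random order
            bmu = best_matching_unit(weights, x)
            for node, w in weights.items():
                grid_dist = math.dist(node, bmu)
                if grid_dist <= radius:
                    influence = math.exp(-grid_dist ** 2 / (2 * radius ** 2))
                    for i in range(dim):
                        w[i] += lr * influence * (x[i] - w[i])   # pull node towards input
    return weights

# Hypothetical customers with three features scaled to [0, 1]
customers = [(0.10, 0.10, 0.10), (0.15, 0.10, 0.12), (0.90, 0.90, 0.90), (0.85, 0.95, 0.90)]
som = train_som(customers, rows=3, cols=3)
for c in customers:
    print(c, "->", best_matching_unit(som, c))
```

The nested loops over every node and every input illustrate why SOMs are comparatively slow on large datasets.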

Advantages of SOMs

- Can work with several classifications while providing a comprehensive and useful summary of the data

- Performs dimensionality reduction and clustering at the same time

- Preserves the topology of the data

- Easier visualisation

- Easier visualisation

Disadvantages of SOMs

- Slow

- Works best with a single data type (typically numeric); mixed data types are hard to handle

- High computational costs

Steps and Evaluation

All the techniques and algorithms above need a dataset to analyse, so I recommend first gathering information about your customers into a single dataset. After that, you can run this dataset through the chosen algorithms using standard data analysis tools.
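Several of the algorithms above are distance-based, and DBSCAN in particular is sensitive to scale. A common preparation step is therefore to standardise each feature before clustering. Here is a minimal standard-library sketch; the feature columns and values are hypothetical.

```python
import statistics

def standardise(rows):
    """Z-score every column: (value - column mean) / column standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.mean(col) for col in columns]
    # use 1.0 for constant columns to avoid dividing by zero
    stdevs = [statistics.pstdev(col) or 1.0 for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stdevs)] for row in rows]

# Hypothetical customers: (total spending, purchases per year, categories bought)
customers = [(1200, 4, 2), (300, 12, 5), (4500, 2, 1), (800, 8, 3)]
scaled = standardise(customers)
for row in scaled:
    print([round(v, 2) for v in row])
```

Without this step, total spending (in the thousands) would dominate every distance calculation, and the other features would barely matter.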

Assess the pros and cons of each clustering technique, and its compatibility with your specific data type. Popular developer resources and library documentation offer accessible explanations and code examples for all the algorithms mentioned. However, evaluating the results can be challenging.

Here are some suitable clustering metrics:

1. Silhouette Score

The Silhouette Score quantifies how well-defined a dataset’s clusters are. Scores range from -1 to 1, and higher scores indicate better-defined clusters.

An object is well matched to its own cluster and poorly matched to neighbouring clusters if its score is close to 1. A score close to -1, on the other hand, suggests that the object may have been assigned to the wrong cluster.

The Silhouette Score is useful for judging how well a clustering method suits the data and for choosing the optimal number of clusters for a particular dataset.
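For a single point, the silhouette is s = (b - a) / max(a, b). Here, a is the mean distance to the other points in the point's own cluster, and b is the mean distance to the points of the nearest other cluster. The Silhouette Score is the mean of s over all points.

Below is a plain-Python sketch, assuming Euclidean distance and at least two clusters. scikit-learn's `sklearn.metrics.silhouette_score` is the usual production choice.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette s = (b - a) / max(a, b) over all points."""
    scores = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        same = [math.dist(p, q) for j, (q, l) in enumerate(zip(points, labels)) if l == lab and j != i]
        if not same:
            scores.append(0.0)  # common convention for single-point clusters
            continue
        a = sum(same) / len(same)  # mean distance within the point's own cluster
        b = min(  # mean distance to the nearest other cluster
            sum(math.dist(p, q) for q, l in zip(points, labels) if l == other) / labels.count(other)
            for other in set(labels) if other != lab
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(points, [0, 0, 1, 1])   # natural grouping
bad = silhouette_score(points, [0, 1, 0, 1])    # points paired with far-away partners
print(round(good, 3), round(bad, 3))
```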

2. Davies-Bouldin Index

The Davies-Bouldin Index offers a numerical assessment of clustering quality, which helps in choosing the best clustering solution for a given dataset.

A lower Davies-Bouldin Index indicates better-defined clusters. For each cluster, the index finds its most similar neighbouring cluster. Similarity here is the ratio of the two clusters’ combined within-cluster scatter to the distance between their centres. The index is the average of these worst-case ratios over all clusters.

Clusters with small intra-cluster distances and large inter-cluster distances produce a lower index, so the index helps identify the optimal number of clusters.
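Written out, the index is DB = (1/k) × sum over clusters i of max over j ≠ i of (S_i + S_j) / d(c_i, c_j). Here, S_i is the average distance of cluster i’s points to its centroid c_i. Below is a plain-Python sketch of that formula, assuming Euclidean distance. scikit-learn provides `sklearn.metrics.davies_bouldin_score` for real use.

```python
import math

def davies_bouldin(points, labels):
    clusters = sorted(set(labels))
    centroids, scatter = {}, {}
    for c in clusters:
        members = [p for p, l in zip(points, labels) if l == c]
        centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
        # S_c: average distance of members to their centroid (intra-cluster spread)
        scatter[c] = sum(math.dist(p, centroids[c]) for p in members) / len(members)
    # for each cluster, the ratio against its most similar (worst-case) neighbour
    worst_ratios = [
        max((scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j]) for j in clusters if j != i)
        for i in clusters
    ]
    return sum(worst_ratios) / len(clusters)

# Two clusters, each with spread 1, whose centres are 10 apart: (1 + 1) / 10
db = davies_bouldin([(0, 0), (0, 2), (10, 0), (10, 2)], [0, 0, 1, 1])
print(db)
```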

3. Purity

Purity evaluates the extent to which each cluster contains data points of a single class, so it requires ground-truth labels. Each cluster is assigned to the class that is most frequent in it. The correctly assigned data points are then counted for each cluster, summed over all clusters, and divided by the total number of data points.

Purity values close to 1 indicate good clustering, while low values indicate poor clustering. However, the higher the number of clusters, the easier it is to achieve high purity. Putting every point in its own cluster gives a purity of exactly 1, so purity alone should not be used to choose the number of clusters.
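The purity calculation takes only a few lines. The labels below are made up to show both a normal case and the “one cluster per point” trap.

```python
from collections import Counter

def purity(cluster_labels, class_labels):
    """Share of points that belong to the majority class of their cluster."""
    members = {}
    for cluster, true_class in zip(cluster_labels, class_labels):
        members.setdefault(cluster, []).append(true_class)
    # for each cluster, count the points of its most frequent class
    correct = sum(Counter(classes).most_common(1)[0][1] for classes in members.values())
    return correct / len(class_labels)

truth = ["a", "a", "b", "b", "b", "b"]
print(purity([0, 0, 0, 1, 1, 1], truth))   # 5/6, about 0.833
print(purity([0, 1, 2, 3, 4, 5], truth))   # 1.0: one point per cluster is trivially "pure"
```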

4. Adjusted Rand Index (ARI)

The ARI compares the results of segmentation or clustering with ground-truth labels to assess how accurate they are, correcting for agreement that would occur by chance. A value of 1 means perfect agreement, random labelling scores close to 0, and higher values indicate better agreement.

The Adjusted Rand Index is reliable even when cluster sizes in the ground truth differ, and it can compare clusterings with different numbers of clusters.
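ARI is computed from the contingency table of the two labellings. It counts the pairs of points on which the labellings agree and corrects for the agreement expected by chance. Below is a minimal sketch; scikit-learn's `sklearn.metrics.adjusted_rand_score` is the standard implementation.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(labels_true, labels_pred):
    n = len(labels_true)
    # pairs of points that share a cell of the contingency table (same class AND same cluster)
    sum_cells = sum(comb(v, 2) for v in Counter(zip(labels_true, labels_pred)).values())
    sum_true = sum(comb(v, 2) for v in Counter(labels_true).values())
    sum_pred = sum(comb(v, 2) for v in Counter(labels_pred).values())
    expected = sum_true * sum_pred / comb(n, 2)   # agreement expected by chance
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        return 1.0   # trivial identical partitions (e.g. everything in one cluster)
    return (sum_cells - expected) / (max_index - expected)

print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0: same grouping, different label names
print(adjusted_rand_index([0, 0, 1, 2], [0, 0, 1, 1]))
```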

Case Study: K-means Clustering for E-commerce Customer Segmentation

TechGadgets.ai is an e-commerce platform specialising in electronic devices and accessories. The company also publishes in-depth product reviews, insightful articles, and comprehensive guides.

TechGadgets.ai uses K-means clustering and the Silhouette Score to create personalised product recommendations based on purchasing behaviour and preferences. After building the dataset, the company chose the following features for clustering:

- Total spending

- Purchase frequency

- Average time spent on product categories

- Number of different product categories purchased

The Silhouette Score indicated that five clusters was the optimal number, so the K-means algorithm was applied with K = 5. As a result, TechGadgets.ai identified five customer segments:

- Tech Enthusiasts

- Budget-Conscious Shoppers

- Occasional Buyers

- Casual Browsers

- New Users
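The model-selection step in this case study can be sketched with scikit-learn: fit K-means for a range of K values, keep the K with the highest Silhouette Score, then refit with that K. TechGadgets.ai’s actual code and data are not described here. This sketch therefore uses synthetic data from `make_blobs` as a stand-in for the four features listed above. The range of K and the random seeds are arbitrary choices.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for four customer features (hypothetical data, not TechGadgets.ai's)
X, _ = make_blobs(n_samples=500, n_features=4, centers=5, random_state=42)
X = StandardScaler().fit_transform(X)  # K-means is distance-based, so put features on one scale

scores = {}
for k in range(2, 9):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
    scores[k] = silhouette_score(X, labels)

best_k = max(scores, key=scores.get)   # K with the highest Silhouette Score
segments = KMeans(n_clusters=best_k, n_init=10, random_state=0).fit_predict(X)
print(best_k, np.bincount(segments))   # chosen K and the size of each segment
```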

TechGadgets.ai can then tailor recommendations to each segment. For example, tech enthusiasts receive updates on the latest gadgets and accessories based on their previous purchases. New users receive welcome emails with personalised recommendations based on their profile information and tips on navigating the site, and so on.

Conclusion

Advanced clustering techniques and algorithms make it possible to create a personalised, easy-to-interpret analysis of customers.

The modern trend towards personalisation of the user experience dictates new rules: without such technologies, a company risks losing relevance in the market.

Moreover, advertising campaigns tend to be more efficient when they are built on customer segmentation, which can lead to higher retention and conversion rates.